Human-Computer Interaction & Information Visualization

Off-Screen Desktop — Master's Thesis

A Spatial & Multi-Touch Interaction System That Supports the Manipulation of Off-Screen Content

September 2014 – August 2015

Technologies: Java, Processing, Leap Motion

Lab Project Page: https://vialab.ca/research/off-screen-desktop

Spatial Peripheral Interaction Techniques for Viewing and Manipulating Off-Screen Digital Content

Master's Thesis — University of Ontario Institute of Technology

Patents

System and Method For Spatial Interaction For Viewing and Manipulating Off-Screen Content

United States Patent Application No. 15/427,631

Canadian Patent Application No. 2,957,383

Contributions

- A formalized descriptive framework of the off-screen interaction space that divides the around-device space into separate interaction volumes.

- The design of spatial off-screen navigation techniques that enable one to view and interact with off-screen content.

- The design and implementation of Off-Screen Desktop, an off-screen interaction system, along with several use case prototypes.

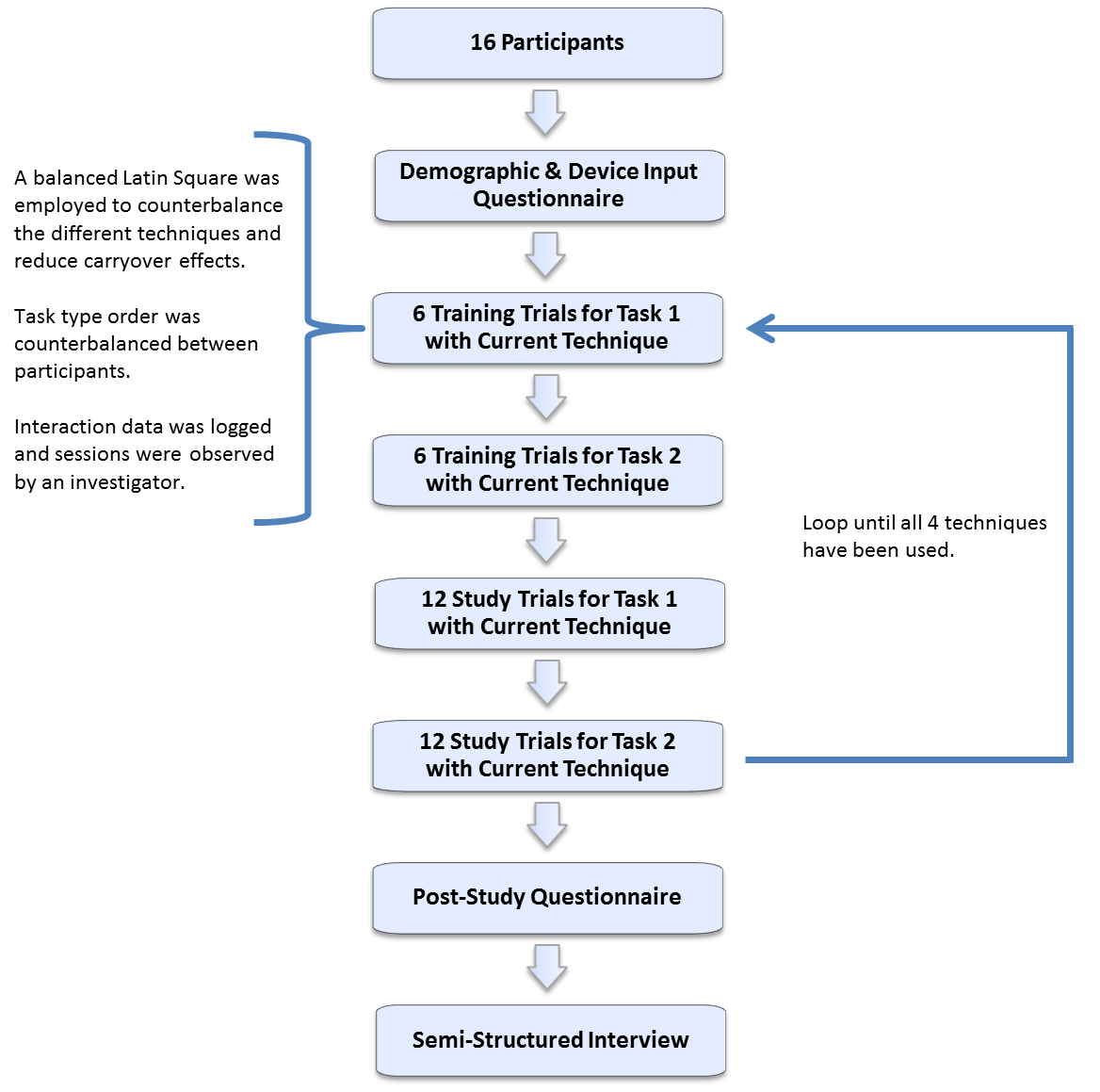

- The results from a comparative evaluation between three of my spatial off-screen navigation techniques and traditional mouse panning using a 2x2x4 factorial within-subjects study design.

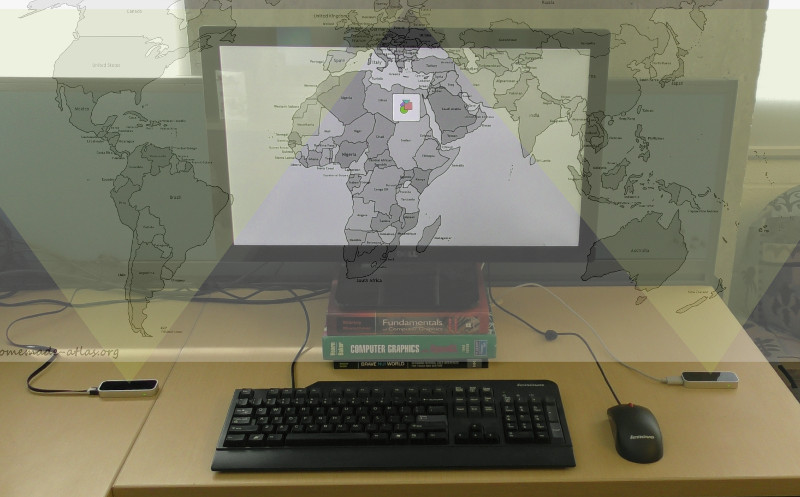

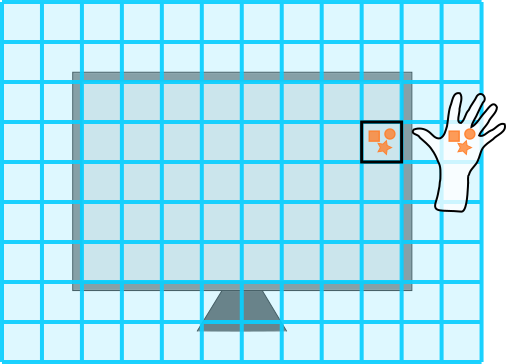

For my thesis, I designed a set of spatial interaction techniques that allows one to explore, navigate, and directly manipulate an information space (e.g., desktop, map, blue grid in image) that is larger than the display. By treating the information space as if it extended past the boundaries of the screen, users can place their hand beside the display to view off-screen content at that location, as well as directly manipulate it with a spatial selection technique (e.g., tap).

To accomplish this, the techniques geometrically transform (e.g., scale, translate) the visual presentation of the information space without affecting its spatial interaction space. Direct manipulation is supported by employing a direct 1:1 mapping between the physical world (motor space) and the information space. Other mappings are supported as well, including ones that take into account the biomechanical properties of the human arm and the overall size of the information space.
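As a rough illustration of the direct 1:1 mapping, the sketch below converts a hand position in motor space into information-space coordinates. It is not the thesis implementation: the class `MotorSpaceMapping`, its method names, and the assumption that the hand position is already expressed in millimetres relative to the display's top-left corner are all hypothetical.

```java
/** Hypothetical helper (not from the thesis): 1:1 motor-space to information-space mapping. */
final class MotorSpaceMapping {
    private final double pixelsPerMm; // display density, assumed known from calibration
    private final double viewOriginX; // information-space x shown at the screen's left edge (px)
    private final double viewOriginY; // information-space y shown at the screen's top edge (px)

    MotorSpaceMapping(double pixelsPerMm, double viewOriginX, double viewOriginY) {
        this.pixelsPerMm = pixelsPerMm;
        this.viewOriginX = viewOriginX;
        this.viewOriginY = viewOriginY;
    }

    /** 1:1 mapping: a millimetre of hand travel covers what a millimetre of screen shows. */
    double toInfoX(double handXmm) {
        return viewOriginX + handXmm * pixelsPerMm;
    }

    double toInfoY(double handYmm) {
        return viewOriginY + handYmm * pixelsPerMm;
    }

    /** True when the hand lies outside the physical screen rectangle. */
    static boolean isOffScreen(double handXmm, double handYmm,
                               double screenWidthMm, double screenHeightMm) {
        return handXmm < 0 || handXmm > screenWidthMm
            || handYmm < 0 || handYmm > screenHeightMm;
    }
}
```

Because the mapping ignores any visual scaling applied to the on-screen content, a hand held 10 mm to the left of the display always addresses the content that would sit 10 mm past the left edge, whatever distortion is currently being drawn.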

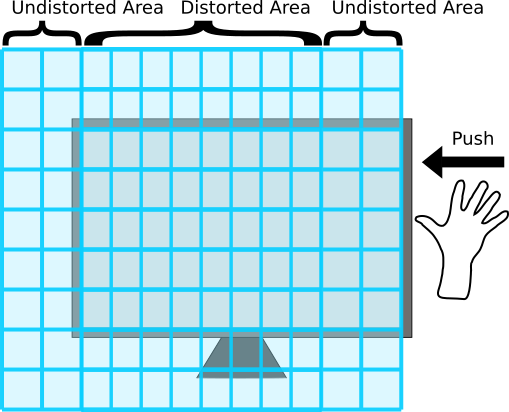

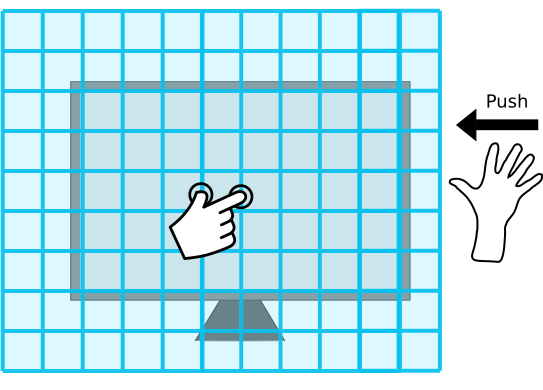

Paper Distortion

The Paper Distortion technique employs a paper-pushing metaphor to display off-screen content. If we imagine the 2D information space as a sheet of paper that is larger than the display, the user can push the paper from the side towards the display to bring off-screen content into the viewport. This causes the section of paper that is over the screen to crumple (distort), creating enough room for the off-screen content by scaling down only the original on-screen content.

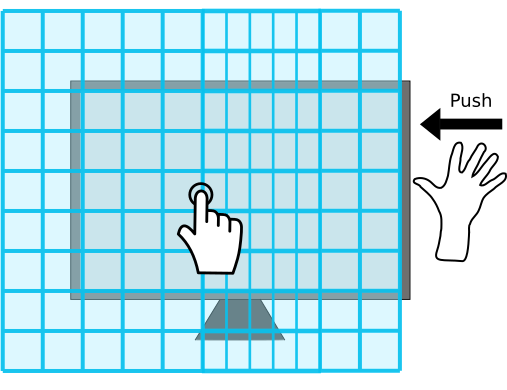

Instead of distorting all of the content on-screen, the user can touch a location on the display whilst performing the pushing gesture to define a starting point of the distortion. The end point will automatically be the closest on-screen location to the performed push gesture. For example, if one pushes horizontally from the right side and touches the middle of the screen, then only on-screen content that is on the middle right side of the display will become distorted.

Similarly, the user has the option to define the end point of the distortion region as well. By touching two locations with one hand and performing the push gesture, only content within those two locations will become distorted.
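The region-based behaviour described above can be sketched for a horizontal push from the right. This is a minimal illustration under stated assumptions, not the thesis code: the class `PaperDistortion`, the linear (uniform) compression, and the parameter names are hypothetical. Content left of `start` stays put, content between `start` and `end` is compressed, and everything right of `end` slides left by the push amount.

```java
/** Hypothetical sketch of Paper Distortion for a push from the right side. */
final class PaperDistortion {
    /**
     * Maps an information-space x (px) to its distorted screen x (px).
     * start..end is the distortion region; pushPx is how far the paper was pushed.
     */
    static double screenX(double infoX, double start, double end, double pushPx) {
        double width = end - start;
        if (width <= 0) {
            throw new IllegalArgumentException("end must be greater than start");
        }
        // The region cannot be compressed below zero width.
        double push = Math.max(0.0, Math.min(pushPx, width));
        if (infoX <= start) {
            return infoX;            // untouched content
        }
        if (infoX >= end) {
            return infoX - push;     // translated only, including off-screen content
        }
        double scale = (width - push) / width; // uniform squeeze inside the region
        return start + (infoX - start) * scale;
    }
}
```

With a 1920 px wide screen, touching the middle (`start = 960`, `end = 1920`) and pushing by 480 px halves the width of the right half of the screen and brings 480 px of off-screen content into view.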

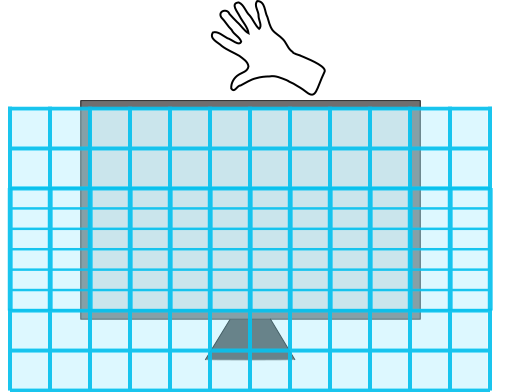

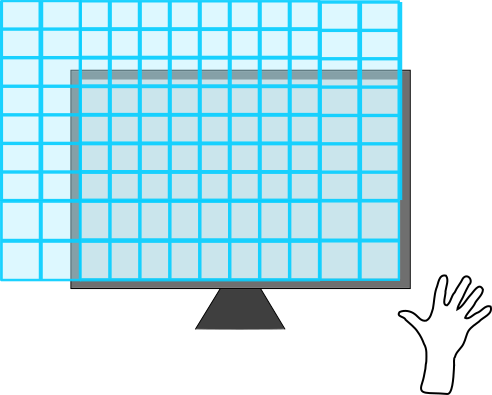

Dynamic Distortion

With Dynamic Distortion, the user is able to continuously change the amount of distortion by adjusting their hand location in relation to the side of the display. To invoke this technique, the user only has to place their hand in an off-screen area. By moving one’s hand further away from the side of the display, the amount of distortion increases since more of the off-screen information space needs to fit on-screen.

To be able to view off-screen content past the corner of the display, the on-screen content is distorted horizontally and vertically.
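One way to express the relationship between hand distance and distortion is a scale factor that shrinks as the hand moves away from the display edge. The sketch below is an assumption-laden illustration, not the thesis implementation: `DynamicDistortion`, the uniform scaling, and the choice to anchor the content at the opposite edge are all hypothetical.

```java
/** Hypothetical sketch of Dynamic Distortion along one axis. */
final class DynamicDistortion {
    /**
     * Scale that makes the screen plus the off-screen strip up to the hand fit on-screen.
     * handDistancePx is the hand's distance past the display edge, in information-space pixels.
     */
    static double scale(double screenSizePx, double handDistancePx) {
        double d = Math.max(0.0, handDistancePx); // a hand over the screen causes no distortion
        return screenSizePx / (screenSizePx + d);
    }

    /** Distorted screen coordinate, keeping the edge opposite the hand fixed at 0. */
    static double screenCoord(double infoCoord, double scale) {
        return infoCoord * scale;
    }
}
```

For a hand past a corner, the same function would be applied twice, once with the screen width and the horizontal distance and once with the screen height and the vertical distance, which yields independent horizontal and vertical scale factors.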

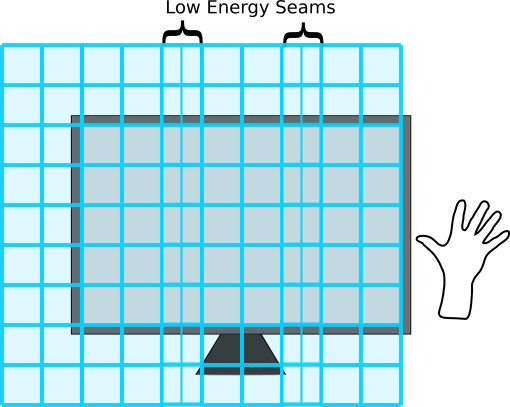

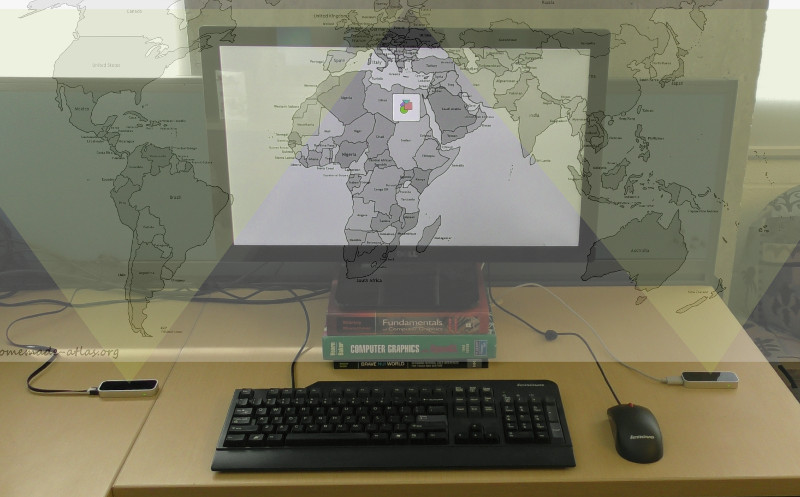

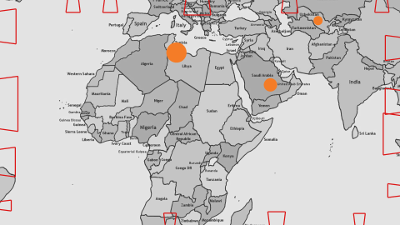

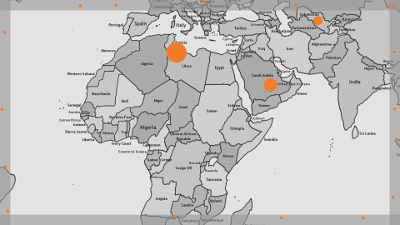

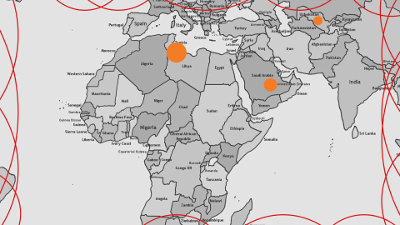

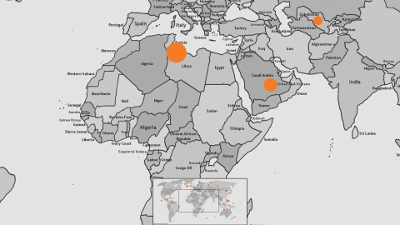

Content-Aware Distortion

To provide further control on how Paper and Dynamic Distortion

transform the information space, I developed Content-Aware

Distortion. This transformation technique takes into account the

energy or importance of pixels to minimize the loss of important

information when distorting an information space. Regions with a

high amount of energy are only translated, whereas regions with a

low amount of energy are distorted to make room for off-screen

content. For example in a map-based interface, if oceans had low

energy and continents had high energy, then this technique would

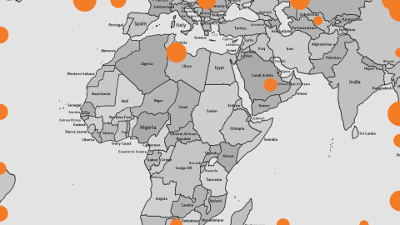

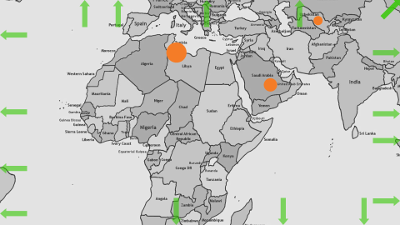

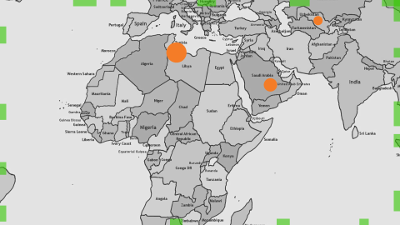

only distort the oceans. In the image below, Paper Distortion

combined with Content-Aware Distortion is used to bring portions of

Russia and the Middle East on-screen by distorting a large section

of the Atlantic Ocean to less than a pixel wide.

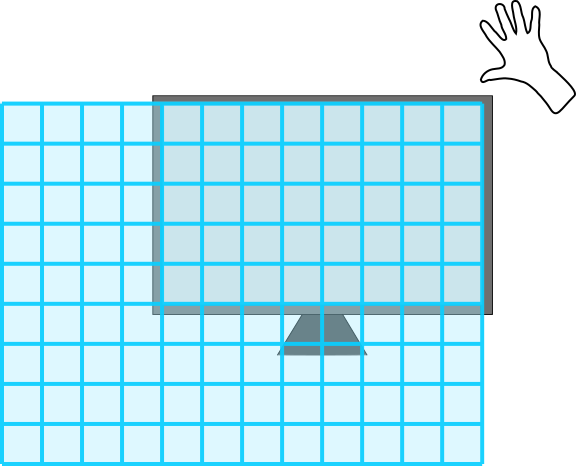
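The idea of translating high-energy regions while squeezing low-energy ones can be illustrated per pixel column. The sketch below is a simplified stand-in, not the thesis algorithm: it assumes an energy value per column is already available, uses a fixed `threshold` to separate "important" from "unimportant" columns, and shares the required shrinkage equally among the low-energy columns. The class and parameter names are hypothetical.

```java
/** Hypothetical sketch: distribute horizontal compression over low-energy columns only. */
final class ContentAwareDistortion {
    /**
     * Returns the drawn width (in px) of each original 1 px column.
     * Columns with energy >= threshold keep width 1 (they are only translated);
     * columns below it share the compression needed to free pixelsToFree pixels.
     */
    static double[] columnWidths(double[] energy, double threshold, double pixelsToFree) {
        int lowCount = 0;
        for (double e : energy) {
            if (e < threshold) {
                lowCount++;
            }
        }
        // Each low-energy column gives up an equal share, but never more than its full width.
        double shrink = lowCount == 0
            ? 0.0
            : Math.min(1.0, Math.max(0.0, pixelsToFree) / lowCount);
        double[] widths = new double[energy.length];
        for (int i = 0; i < energy.length; i++) {
            widths[i] = energy[i] < threshold ? 1.0 - shrink : 1.0;
        }
        return widths;
    }
}
```

Summing the returned widths from left to right gives each column's new screen position, so a wide low-energy area such as an ocean can collapse to a fraction of a pixel while neighbouring high-energy areas keep their shape.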

Dynamic Peephole Inset

The Dynamic Peephole Inset technique displays off-screen content that is located underneath the user’s hand in an inset/viewport. This viewport is situated on-screen at the edge of the display with many options for its exact placement. The viewport can be fixed at the closest corner to the hand, the centre of the closest edge, or continuously follow the location of the hand.
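The three placement options can be sketched as a small function that returns the inset's top-left corner. This is an illustrative sketch only: `PeepholeInset`, the `Placement` enum, and the rule used to pick the closest edge are hypothetical, and the hand position is assumed to be expressed in screen pixels, with off-screen positions lying outside `0..screenW` or `0..screenH`.

```java
/** Hypothetical sketch of the inset placement options for Dynamic Peephole Inset. */
final class PeepholeInset {
    enum Placement { CLOSEST_CORNER, EDGE_CENTRE, FOLLOW_HAND }

    /** Returns {x, y} of the inset's top-left corner, keeping the inset fully on-screen. */
    static double[] topLeft(Placement mode, double handX, double handY,
                            double screenW, double screenH,
                            double insetW, double insetH) {
        double maxX = screenW - insetW;
        double maxY = screenH - insetH;
        switch (mode) {
            case CLOSEST_CORNER:
                return new double[] { handX < screenW / 2 ? 0 : maxX,
                                      handY < screenH / 2 ? 0 : maxY };
            case EDGE_CENTRE: {
                // How far the hand lies beyond the screen on each axis.
                double overX = handX < 0 ? -handX : Math.max(0, handX - screenW);
                double overY = handY < 0 ? -handY : Math.max(0, handY - screenH);
                if (overX >= overY) { // left or right edge is closest
                    return new double[] { handX < screenW / 2 ? 0 : maxX, maxY / 2 };
                }
                return new double[] { maxX / 2, handY < screenH / 2 ? 0 : maxY };
            }
            default: // FOLLOW_HAND: slide along the edge, centred on the hand
                return new double[] { clamp(handX - insetW / 2, 0, maxX),
                                      clamp(handY - insetH / 2, 0, maxY) };
        }
    }

    private static double clamp(double v, double lo, double hi) {
        return Math.max(lo, Math.min(hi, v));
    }
}
```

For a hand to the right of a 1920 x 1080 screen, all three modes place a 400 x 300 inset against the right edge; they differ only in the vertical position.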

Point2Pan

Point2Pan is a ray-casting technique similar to the ones designed for interacting with distant objects in 3D environments. When a user points to a section of the information space that lies off-screen, the system translates (pans) the information space to show this section on-screen. The user can then manipulate the content using touch or the mouse, for example, or end the pointing gesture to translate the information space back to its original location. This allows one to quickly explore the surrounding off-screen area without much physical effort.
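The ray-casting step can be sketched as a ray and plane intersection. The sketch below is a generic illustration rather than the thesis code: it assumes the information space lies in the plane `z = 0`, that the fingertip position and pointing direction are already expressed in that coordinate system in pixel units, and that the pointed-at location should be panned to the centre of the screen. The class and method names are hypothetical.

```java
import java.util.Optional;

/** Hypothetical sketch of the Point2Pan ray cast against the plane z = 0. */
final class Point2Pan {
    /** Returns the {x, y} point where the pointing ray meets the plane, if it does. */
    static Optional<double[]> hitOnScreenPlane(double[] origin, double[] dir) {
        if (dir[2] == 0.0) {
            return Optional.empty(); // ray is parallel to the plane
        }
        double t = -origin[2] / dir[2];
        if (t <= 0.0) {
            return Optional.empty(); // user is pointing away from the plane
        }
        return Optional.of(new double[] { origin[0] + t * dir[0],
                                          origin[1] + t * dir[1] });
    }

    /** Translation that brings the hit point to the centre of the screen (an assumed target). */
    static double[] panToCentre(double[] hit, double screenW, double screenH) {
        return new double[] { screenW / 2.0 - hit[0], screenH / 2.0 - hit[1] };
    }
}
```

Ending the pointing gesture would then correspond to resetting the translation to zero, which returns the information space to its original location.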

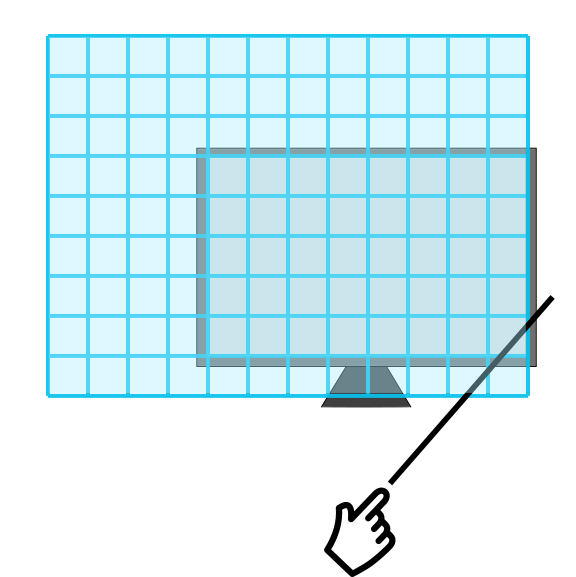

Spatial Panning

The Spatial Panning technique translates (pans) the information space to show off-screen content. When the user places their hand directly in the part of the information space that resides off-screen, the system translates the environment so that the content located at the position of the user’s hand is shown on-screen. For example, on the right side of the screen, the vertical panning amount can be calculated based on the distance between the hand and the vertical centre of the screen. Similarly, the horizontal panning amount can be calculated based on the distance between the hand and the right side of the screen.
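The right-side example above translates directly into a small calculation. The following is a minimal sketch, not the thesis implementation: `SpatialPanning` and its method are hypothetical names, and the hand position is assumed to be in screen pixels with the origin at the top-left corner of the display.

```java
/** Hypothetical sketch of the Spatial Panning amounts for a hand right of the screen. */
final class SpatialPanning {
    /**
     * Returns {panX, panY}: how far the view moves over the information space.
     * The content itself would be drawn translated by (-panX, -panY).
     */
    static double[] viewOffsetForRightSide(double handX, double handY,
                                           double screenW, double screenH) {
        // Horizontal amount: distance between the hand and the right side of the screen.
        double panX = Math.max(0.0, handX - screenW);
        // Vertical amount: signed distance between the hand and the vertical centre.
        double panY = handY - screenH / 2.0;
        return new double[] { panX, panY };
    }
}
```

The other three sides would follow the same pattern with the roles of the axes and edges swapped.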

To be able to unleash content from the boundaries of the display into the surrounding space, I created Off-Screen Desktop. It is a multimodal 2D zoomable user interface (ZUI) that enables the manipulation of on-screen and off-screen objects. Off-Screen Desktop integrates all of the aforementioned off-screen navigation techniques with support for spatial, mouse and touch-based interaction. To build Off-Screen Desktop, I employed a multi-touch monitor along with two motion sensors (Leap Motion).

To provide the user with knowledge of off-screen content without requiring one to explore the off-screen information space, I integrated various off-screen visualization techniques from the human-computer interaction/information visualization literature into the system.

Wedge: Clutter-free visualization of off-screen locations. Gustafson, S., Baudisch, P., Gutwin, C., and Irani, P. (2008). In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’08.

Comparing visualizations for tracking off-screen moving targets. Gustafson, S. G. and Irani, P. P. (2007). In CHI ’07 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’07.

Halo: A technique for visualizing off-screen objects. Baudisch, P. and Rosenholtz, R. (2003). In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’03.

A review of overview+detail, zooming, and focus+context interfaces. Cockburn, A., Karlson, A., and Bederson, B. B. (2009). ACM Comput. Surv.

Visualization of off-screen objects in mobile augmented reality. Schinke, T., Henze, N., and Boll, S. (2010). In Proceedings of the 12th International Conference on Human Computer Interaction with Mobile Devices and Services, MobileHCI ’10.

City lights: Contextual views in minimal space. Zellweger, P. T., Mackinlay, J. D., Good, L., Stefik, M., and Baudisch, P. (2003). In CHI ’03 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’03.

Desktop

My desktop application is shown here with a before and after sequence of its information space being distorted to view the Twitter feed that is located off-screen.

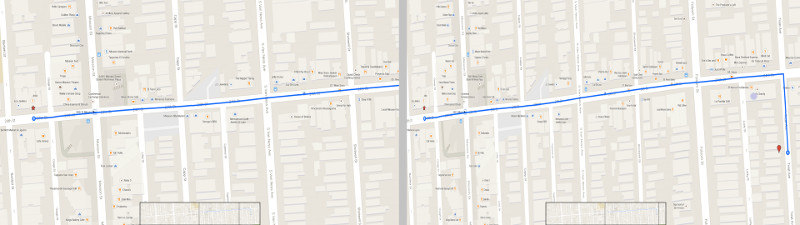

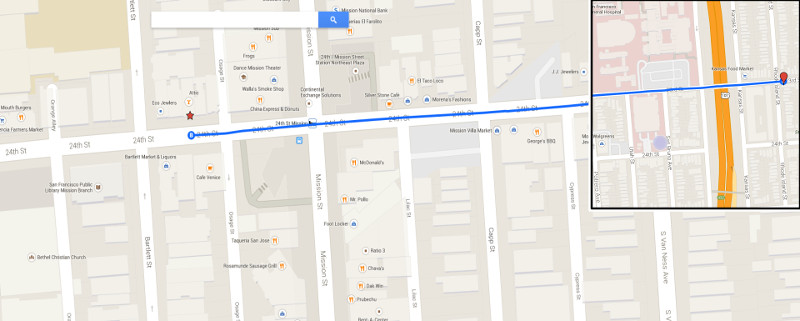

Maps

The left image shows a planned route that is too long for the size of the screen at the current zoom level. The right image shows the same route, but employs the Dynamic Distortion technique to show the entire route without zooming out and losing detailed information.

In this map application, the on-screen section of the information space provides a higher level of detail than the off-screen sections; thus modifying the concept of an overview + detail interface.

Off-Screen Toolbars

System- or application-specific toolbars can be located off-screen to save screen space and brought on-screen only when needed, as shown here using the Dynamic Distortion technique.

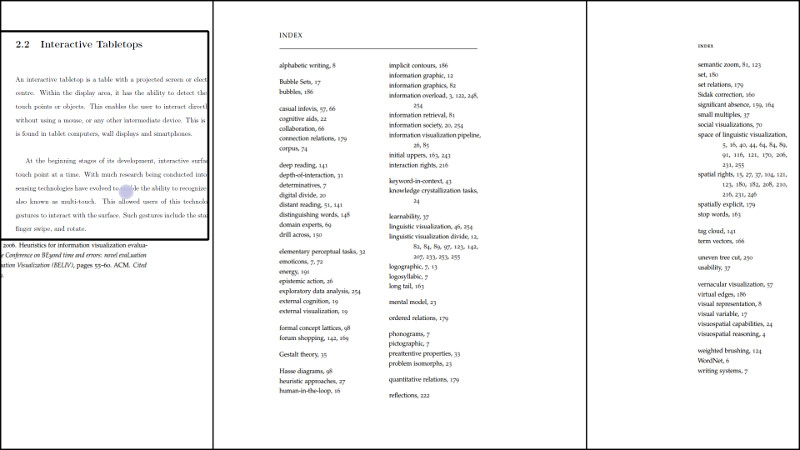

Document Exploration and Navigation

In this application, the Dynamic Peephole Inset technique is being employed to explore a document while maintaining a view of its index page. Therefore, the user does not have to flip back and forth between the index page and the search pages when trying to find the target content.

To evaluate my techniques, I performed a comparative evaluation between three of my spatial off-screen navigation techniques and traditional mouse panning using a 2x2x4 factorial within-subjects study design. Read my master's thesis for more information.